Pushing the Frontier of Exact Yet Flexible Probabilistic AI with Subtractive Deep Models

Aleksanteri Sladek’s HIIT-funded Master’s thesis research explores how to increase the expressiveness of deep tractable probabilistic models.

The thesis “Positive Semi-Definite Probabilistic Circuits”, written by Aleksanteri Sladek, investigates ways to enlarge the class of probability distributions that admit a tractable (exact and efficient) representation as a probabilistic circuit (PC) by exploring links to kernel methods. The work was completed at the Aalto University Department of Computer Science under the supervision of Prof. Arno Solin and Dr. Martin Trapp.

Probabilistic modelling and the representation of uncertainty are at the very core of trustworthy and reliable AI. To infer quantities of interest from a probabilistic model, the basic rules of probability can be applied, which often involves marginalizing (integrating out) variables. For example, one could have a probabilistic model of traffic jams across time within a road network and wish to infer the average probability of a traffic jam on a given road. For many probabilistic models, however, obtaining such marginal probabilities is prohibitively expensive, so exact probabilities cannot be computed from the model in practice. This is one of the major obstacles to using such models in real-world settings where reliable and efficient probabilistic inference is critical.

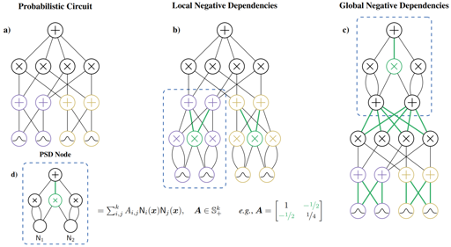

Tractable probabilistic models, such as PCs, aim to remedy this problem by guaranteeing that certain inference operations can be computed exactly and efficiently. This is achieved by enforcing constraints on how the models are formulated. As a result, these models allow efficient marginalization (integration) of any subset of variables, meaning that quantities such as conditional probabilities and the normalization constant of a learned model can be computed exactly (without approximation) and efficiently (in polynomial time). Enforcing these constraints, however, is also known to limit the class of probability distributions the models can represent, especially in continuous data domains.
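To make the idea of tractable marginalization concrete, the following is a minimal sketch of a toy circuit: a single sum node over two product nodes with univariate Gaussian leaves. The structure, weights, and leaf parameters are illustrative assumptions and are not taken from the thesis. Because each leaf integrates to one, a variable can be marginalized exactly by replacing its leaf with the constant 1 and evaluating the circuit once.

```python
import math


def gaussian_pdf(x, mean, std):
    """Density of a univariate Gaussian at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


# Hypothetical toy circuit over two variables (x1, x2):
# one sum node with non-negative weights over two product nodes,
# each product node multiplying one Gaussian leaf per variable.
WEIGHTS = [0.3, 0.7]
LEAVES = [
    [(-1.0, 0.5), (0.0, 1.0)],  # (mean, std) for x1 and x2 in product node 1
    [(2.0, 1.0), (1.0, 0.8)],   # (mean, std) for x1 and x2 in product node 2
]


def circuit_density(x):
    """Evaluate the circuit in a single pass.

    An entry of None in x marginalizes that variable: its leaf is
    replaced by 1, which is the exact integral of a normalized leaf.
    """
    total = 0.0
    for weight, component in zip(WEIGHTS, LEAVES):
        product = 1.0
        for value, (mean, std) in zip(x, component):
            product *= 1.0 if value is None else gaussian_pdf(value, mean, std)
        total += weight * product
    return total


joint = circuit_density([0.5, 0.2])          # p(x1=0.5, x2=0.2)
marginal_x1 = circuit_density([0.5, None])   # p(x1=0.5), x2 integrated out
conditional = joint / marginal_x1            # p(x2=0.2 | x1=0.5)
normalizer = circuit_density([None, None])   # integral over both variables
```

Every query above costs one evaluation of the circuit, so its cost grows linearly with the circuit's size. Real PCs stack many sum and product layers, but the same leaf-replacement trick applies under the usual structural constraints.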

The research work focused on loosening the non-negative weight constraint enforced on PC parameters (which ensures the non-negativity of the PC’s outputs) to allow for subtractions to occur within the model whilst still ensuring the non-negativity of the model’s outputs. This was hypothesized to increase the representational flexibility of the model whilst still retaining its tractability. The work presented preliminary results on synthetic data sets, comparing PCs with negative parameters and PCs with non-negatively constrained parameters, showing increased performance of the novel model class with a mild increase in computational cost.
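One simple way to see how subtraction can coexist with non-negative outputs is to square a mixture that has a negative weight. The sketch below does this for two univariate Gaussians; it is an illustrative toy under assumed parameters, not the construction used in the thesis, which may differ. Squaring corresponds to the rank-one case of a positive semi-definite quadratic form, and the normalization constant stays exact because the integral of a product of two Gaussian densities has a closed form.

```python
import math


def gaussian_pdf(x, mean, std):
    """Density of a univariate Gaussian at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


# Hypothetical parameters: a broad component minus a narrow one.
MEANS = [0.0, 0.0]
STDS = [2.0, 0.5]
WEIGHTS = [1.0, -0.2]  # the second weight is negative (a subtraction)


def normalizer():
    """Exact integral of the squared mixture.

    Uses the identity: the integral over x of N(x; a, s1^2) * N(x; b, s2^2)
    equals N(a; b, s1^2 + s2^2), summed over all pairs of components.
    """
    total = 0.0
    for wi, mi, si in zip(WEIGHTS, MEANS, STDS):
        for wj, mj, sj in zip(WEIGHTS, MEANS, STDS):
            total += wi * wj * gaussian_pdf(mi, mj, math.sqrt(si * si + sj * sj))
    return total


def density(x):
    """Normalized squared mixture; non-negative for any real weights."""
    mixture = sum(
        w * gaussian_pdf(x, m, s) for w, m, s in zip(WEIGHTS, MEANS, STDS)
    )
    return mixture * mixture / normalizer()
```

With these assumed parameters, the negative component carves a dip around zero, so `density(1.0)` is larger than `density(0.0)` and the result is bimodal, a shape that a non-negative mixture of the same two zero-centred components cannot produce. The pairwise sum in `normalizer` is quadratic in the number of components, which gives one intuition for why subtraction comes with some additional computational cost.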

The work was also published at the 6th Workshop on Tractable Probabilistic Modeling, held at the Conference on Uncertainty in Artificial Intelligence (UAI) 2023 in Pittsburgh, Pennsylvania, USA. It is also slated to be presented as an oral presentation in the scientific track of the Finnish Center for Artificial Intelligence’s AI Day event, to be held on 8 November 2023.

More information:

Aleksanteri Sladek (aleksanteri.sladek@aalto.fi)

Martin Trapp (martin.trapp@aalto.fi)

References:

Aleksanteri Sladek (2023). Positive Semi-Definite Probabilistic Circuits. Master’s thesis. Department of Computer Science, Aalto University. Espoo, Finland. http://urn.fi/URN:NBN:fi:aalto-202308275275

Aleksanteri Sladek, Martin Trapp, and Arno Solin (2023). Encoding negative dependencies in probabilistic circuits. In the 6th Workshop on Tractable Probabilistic Modeling at Uncertainty in Artificial Intelligence (UAI). Pittsburgh, Pennsylvania, USA. https://openreview.net/pdf?id=Jpd7qHaK46