Machine Learning and Algorithms: You Scratch My Back, I’ll Scratch Yours

Scaling up machine learning methods to deal with big data scenarios requires solving many challenging algorithmic problems. However, machine learning can also help speed up algorithms. A case where machine learning and algorithms research benefit each other is nearest neighbour search.

Algorithms researchers investigate ways to solve tasks efficiently using as little computational resources such as compute time and memory as possible. Every computer science student has tried doing this for classic tasks like sorting a list alphabetically or finding shortest paths for a traveling salesman. Machine learning researchers are heavy users of algorithms since a machine learning algorithm may involve multiple elementary algorithms as subroutines. Solving all of them efficiently is a necessity to process large data sets.

A good example of an algorithmic problem is the nearest neighbour retrieval task: Given a large corpus of data, usually represented as set of high-dimensional vectors, and a query point x, the task is to identify the items in the corpus that are nearest to x. Solving this task can be used for recommending similar pieces of music based on the song you’re currently listening on Spotify [1], or to find similar sentences to enrich training data used to fine-tune machine translation systems [2], and in countless other practical use cases.

The key to fast nearest neighbour retrieval times is to construct an index data structure based on the corpus, which can take a while, and then use the index many times for rapid queries. This resembles the idea of an alphabetically sorted catalogue – if you are old enough, you can think of a telephone directory. Sorting the items can be tedious, but once it’s done, the catalogue can be used to quickly find a particular item without having to scan through the entire data set. There exist a great variety of algorithms, each more ingenious than the next, for solving the nearest neighbour problem, from hashing-based techniques such as locality sensitive hashing (LSH) [3] to graph-based ones such as the navigable small world graphs (NSWG) method [4]. Most of them solve the nearest neighbour task only approximately, but in return for a small margin of error, these algorithms allow several orders of magnitude faster query times than the exact brute-force approach. In most cases, queries can be processed in less than a millisecond time.

The machine learning researcher – and perhaps even more so the practitioner – owes a great deal to the algorithms people for coming up with such convenient, general purpose algorithms. Fast nearest neighbour queries can be used in many machine learning methods including – yes, if you know a bit about machine learning, you probably guessed it already – the nearest neighbour classifier.

However, this is where the research of the doctoral candidate Ville Hyvönen and his advisor Prof Teemu Roos takes a slightly different turn. They are working together with Elias Jääsaari (formerly at HIIT and currently working as a doctoral candidate at the Machine Learning Department of the Carnegie Mellon University, USA) in a project that has received funding also from HIIT. In their prior work, Hyvönen and others have devised nearest neighbour methods that involve ensembles of random projection trees and a voting scheme to identify the most likely nearby corpus points [5]. This method has already found use in, for instance, the aforementioned machine translation system by Microsoft Research, and it has recently been ported to Julia [6] and Haskell [7]. The different turn that Hyvönen and Roos are currently exploring is to turn the tables and let machine learning do the heavy lifting in order to speed up their nearest neighbour algorithms.

So instead of only machine learning methods benefiting from algorithms, they are contributing to faster and more accuracy algorithms by applying existing machine learning methods. You scratch my back and I’ll scratch yours.

Following their earlier work that makes use of ensembles of random projection trees, the team is applying random forests to construct novel types of nearest neighbour algorithms [8]. (The random forest is a machine learning technique that involves multiple tree-structured models to construct a single, powerful classifier.)

There are several advantages that follow from approaching the nearest neighbour task as a machine learning problem. First, it provides a unified framework to interpret and analyse a number of existing nearest neighbour algorithms including the ones proposed by Hyvönen and others. Second, it directly points to certain improvements in the said algorithms, which make them faster and more accurate. Third, it enables tuning the algorithms for specific types of queries by explicitly modelling the distribution of the queries: since the algorithms will always perform better for some queries and worse for others, it’s smart to focus especially on the queries that tend to occur more frequently than on ones that are hardly ever encountered.

With their new machine learning approach, Hyvönen, Roos, and Jääsaari are developing fast and accurate nearest neighbour algorithms. By making their algorithms openly available as an open source software package, they hope to see them in a wide range of practical applications that involve the age-old nearest neighbour problem as a subroutine, and indirectly, to more or less every area of science and engineering from astrophysics to sociology and from fusion research to personalized medicine.

But first, it’s back to the whiteboard to check one more theorem… would the Vapnik-Chervonenkis dimension work here?

Contact: Prof. Teemu Roos, University of Helsinki

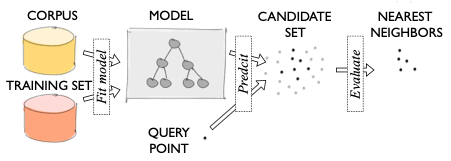

Figure: The computational pipeline. The machine learning task is to learn a model that predicts which corpus points are the most likely nearest neighbours of a given query point. After the model has been constructed, it can be used to narrow down the search into a candidate set of likely nearest neighbours, which is much faster than scanning the entire corpus.

[1] Erik Bernhardsson. Annoy C++ library.

[2] Hany Hassan, Mostafa Elaraby, Ahmed Tawfik. Synthetic Data for Neural Machine Translation of Spoken-Dialects, ArXiv pre-print.

[3] Piotr Indyk, Rajeev Motwani. Approximate nearest neighbors: towards removing the curse of dimensionality, STOC’98.

[4] Yury Malkov, Alexander Ponomarenko, Andrey Logvinov, Vladimir Krylov. Approximate nearest neighbor algorithm based on navigable small world graphs, Information Systems 45, 2014, pp. 61–68.

[5] V. Hyvönen, T. Pitkänen, S. Tasoulis, E. Jääsaari, R. Tuomainen, L. Wang, J. Corander, and T. Roos. Fast nearest neighbor search through sparse random projections and voting, IEEE Big-Data 2016.

[6] D.J. Passey. Shrike Julia package.

[7] Marco Zotta, rp-tree Haskell package.

[8] Ville Hyvönen, Elias Jääsaari, Teemu Roos. Approximate Nearest Neighbor Search as a Multi-Label Classification Problem, ArXiv pre-print.